How I Learned and Used Ansible for Automation

Automating server updates with Ansible simplifies multi-server maintenance. This post covers my learning process, setup, and a practical playbook.

Introduction

Keeping multiple servers up to date and properly maintained can be a challenging task. To make this process more efficient, I explored Ansible to automate system updates and maintenance tasks across multiple servers. This blog post details my learning process, the setup I used, and a practical playbook to manage updates seamlessly.

Setting Up the Environment

I used a Proxmox server as the host, where I created an Ansible control node. From this control node, I configured multiple managed nodes that would receive commands remotely. Here’s how I structured my setup:

- Accessing the Ansible Control Node
  - I SSH’d into the Ansible controller from my workstation.

- Setting Up SSH Key-Based Authentication
  - Instead of repeatedly entering passwords, I added my SSH public key to each managed node’s ~/.ssh/authorized_keys.

- Creating a Dedicated Ansible User
  - On each node, I created a separate sudo-enabled user called ansible, ensuring it had the necessary permissions to execute administrative tasks.
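The user and key steps above can also be written as a playbook instead of being repeated by hand on each node. The following is a minimal sketch under stated assumptions, not the exact procedure used here: it assumes Debian-based nodes (where membership in the sudo group grants sudo rights), an initial login that can already become root, the ansible.posix collection installed on the control node, and a public key at ~/.ssh/id_ed25519.pub. The file name bootstrap.yml and the key path are hypothetical.

```yaml
# bootstrap.yml (hypothetical file name): create the ansible user and install a public key.
# Assumes Debian-based nodes and the ansible.posix collection on the control node.
- name: Bootstrap the dedicated ansible user
  hosts: galaxy
  become: true

  tasks:
    - name: Create the ansible user with sudo group membership
      ansible.builtin.user:
        name: ansible
        groups: sudo        # On Debian/Ubuntu, members of 'sudo' may use sudo
        append: true
        shell: /bin/bash
        state: present

    - name: Add the control node's public key to authorized_keys
      ansible.posix.authorized_key:
        user: ansible
        state: present
        # Key path is an assumption; point it at your own public key file.
        key: "{{ lookup('ansible.builtin.file', lookup('ansible.builtin.env', 'HOME') ~ '/.ssh/id_ed25519.pub') }}"
```

Because both tasks are idempotent, re-running the play against a node that is already set up should report no changes.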

Configuring Ansible Inventory

To define the hosts, I created an inventory file (inventory.ini), where I grouped all the target machines under [galaxy]:

inventory.ini

[galaxy]

192.168.254.175

192.168.254.176

192.168.254.183

192.168.254.179

192.168.254.107

This inventory file allowed me to run commands across all listed nodes efficiently.
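Ansible also accepts inventories written in YAML, which can be convenient when group variables need to live next to the hosts. As a rough sketch, the same galaxy group could look like the following. The file name inventory.yml and the ansible_user value are assumptions for illustration; the original setup used the INI file shown above.

```yaml
# inventory.yml (hypothetical file name): YAML equivalent of the [galaxy] group.
galaxy:
  hosts:
    192.168.254.175:
    192.168.254.176:
    192.168.254.183:
    192.168.254.179:
    192.168.254.107:
  vars:
    ansible_user: ansible   # Assumption: connect as the dedicated user
```

Running ansible-inventory -i inventory.yml --list prints the parsed result, which is a quick way to confirm the file is read as intended.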

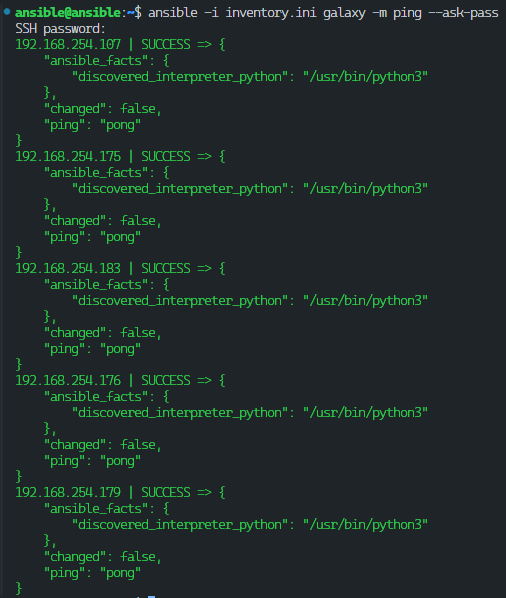

Checking Connection to Nodes

Before running the playbook, I first verified the connection to all nodes using the following command:

ansible -i inventory.ini galaxy -m ping --ask-pass

This ensures that all nodes are reachable and properly configured before proceeding with automation tasks.
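The same check can be kept as a small playbook, which is handy when it should run before every maintenance window. This is a minimal sketch, with check-nodes.yml as a hypothetical file name. Note that the ping module is not an ICMP ping: it confirms that Ansible can log in and find a usable Python interpreter on the node.

```yaml
# check-nodes.yml (hypothetical file name): confirm each node is reachable and report its OS.
- name: Verify connectivity to managed nodes
  hosts: galaxy
  gather_facts: true

  tasks:
    - name: Ping each node through Ansible
      ansible.builtin.ping:

    - name: Show the distribution reported by each node
      ansible.builtin.debug:
        msg: "{{ inventory_hostname }} runs {{ ansible_facts['distribution'] }} {{ ansible_facts['distribution_version'] }}"
```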

Writing an Ansible Playbook

Once my environment was set up, I wrote a simple Ansible playbook (galaxy-update.yml) to update and upgrade system packages across all hosts. This playbook ensures that:

- The package list is updated.

- All packages are upgraded to the latest versions.

- Unnecessary packages are removed.

- Cached packages are cleaned up.

Here’s the playbook:

galaxy-update.yml

    - name: Update and upgrade system packages
      hosts: all       # Target all nodes in the inventory
      become: yes      # Run tasks with sudo privileges

      tasks:
        - name: Update package lists
          apt:
            update_cache: yes

        - name: Upgrade all packages
          apt:
            upgrade: dist    # Equivalent to 'apt-get dist-upgrade'
            autoremove: yes  # Remove unnecessary packages
            autoclean: yes   # Clean up cached packages

Running the Playbook

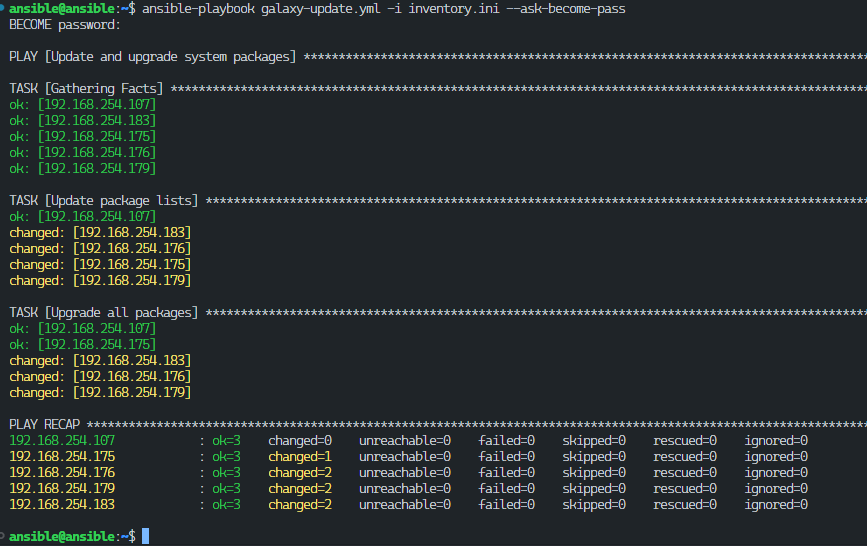

To execute this playbook, I simply ran the following command:

ansible-playbook galaxy-update.yml -i inventory.ini --ask-become-pass

Ansible then logged into each node, executed the update commands, and provided real-time feedback.
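After an upgrade, some nodes may need a reboot before new kernels or libraries take effect. The play below is a hypothetical follow-up, not part of the original playbook: it only reports which nodes are waiting for a reboot and does not restart anything. It assumes the nodes create the marker file /var/run/reboot-required, as Ubuntu typically does; on other distributions the file may never appear.

```yaml
# Hypothetical follow-up play: report nodes that need a reboot after upgrading.
# Assumes the nodes create /var/run/reboot-required when a reboot is pending.
- name: Check whether a reboot is pending
  hosts: all
  become: true

  tasks:
    - name: Look for the reboot-required marker file
      ansible.builtin.stat:
        path: /var/run/reboot-required
      register: reboot_marker

    - name: Report nodes that need a reboot
      ansible.builtin.debug:
        msg: "{{ inventory_hostname }} needs a reboot"
      when: reboot_marker.stat.exists
```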

Lessons Learned

- Ansible’s Agentless Nature: Unlike Puppet or Chef, Ansible doesn’t require an agent on remote machines, making setup much easier.

- Simplified Automation: A single playbook can handle updates across multiple servers effortlessly.

- Security Best Practices: Using a dedicated ansible user with limited sudo privileges improves security.

- Efficiency: Running updates manually on multiple nodes can be tedious; Ansible streamlines this process significantly.

Conclusion

Learning Ansible has been a game-changer in how I manage multiple servers. By leveraging simple YAML playbooks, I can automate repetitive administrative tasks efficiently. If you’re looking to automate system maintenance, Ansible is a powerful and beginner-friendly tool worth exploring.